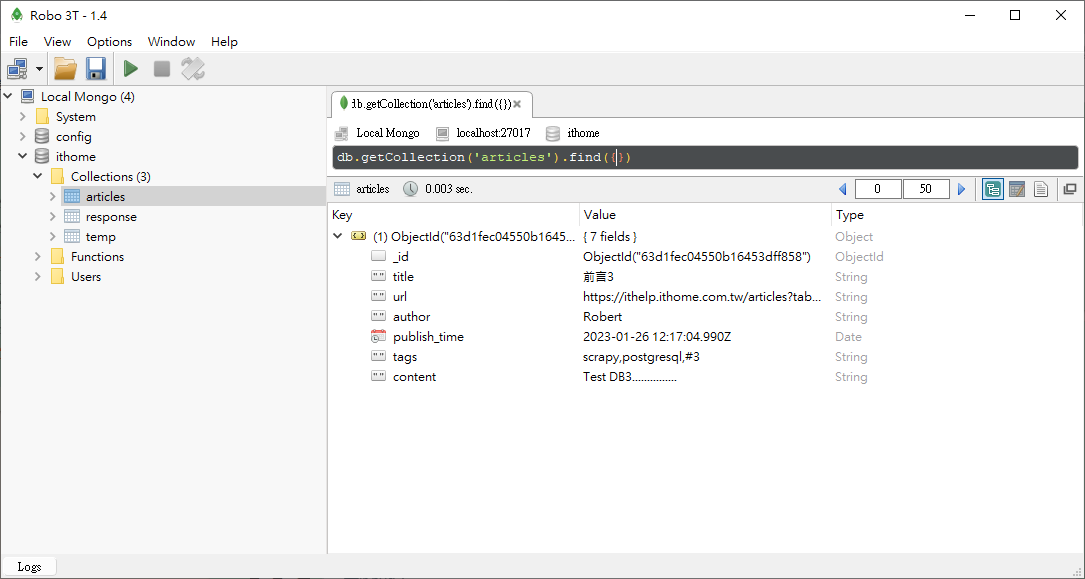

    from itemadapter import ItemAdapter
    import psycopg2
    from pymongo import MongoClient
    from datetime import datetime


    class IthomePipeline:
        def process_item(self, item, spider):
            return item


    class MongoPipeline:
        def open_spider(self, spider):
            # Connection settings are hard-coded here for the tutorial.
            host = 'localhost'
            port = '27017'
            dbname = 'ithome'
            self.client = MongoClient('mongodb://%s:%s@%s:%s/' % (
                'mongoadmin',
                'mg123456',
                host,
                port
            ))
            # MongoClient connects lazily, so this only confirms the client was created.
            print('Database connection successful!')
            self.db = self.client[dbname]
            self.article_collection = self.db.articles
            self.response_collection = self.db.response

        def close_spider(self, spider):
            self.client.close()

        def process_item(self, item, spider):
            article = {
                'title': item.get('title'),
                'url': item.get('url'),
                'author': item.get('author'),
                'publish_time': item.get('publish_time'),
                'tags': item.get('tags'),
                'content': item.get('content'),
                'view_count': item.get('view_count')
            }

            # The URL identifies an article: insert it if new, otherwise update it.
            doc = self.article_collection.find_one({'url': article['url']})
            article['update_time'] = datetime.now()

            if not doc:
                article_id = self.article_collection.insert_one(article).inserted_id
            else:
                self.article_collection.update_one(
                    {'_id': doc['_id']},
                    {'$set': article}
                )
                article_id = doc['_id']

            # Fall back to an empty list so items without responses do not raise.
            article_responses = item.get('responses') or []
            for article_response in article_responses:
                response = {
                    '_id': article_response['resp_id'],
                    'article_id': article_id,
                    'author': article_response['author'],
                    'publish_time': article_response['publish_time'],
                    'content': article_response['content'],
                }
                # Upsert keyed on the response ID so re-crawls do not create duplicates.
                self.response_collection.update_one(
                    {'_id': response['_id']},
                    {'$set': response},
                    upsert=True
                )

            return item
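
The connection string in `open_spider` is assembled with plain `%` formatting, which works for the sample credentials but can break if the username or password contains characters such as `@` or `:`. As a minimal sketch (the helper name `build_mongo_uri` is hypothetical and not part of the original pipeline), the credentials could be percent-escaped with the standard library before being placed in the URI:

```python
from urllib.parse import quote_plus


def build_mongo_uri(user, password, host='localhost', port=27017):
    """Build a MongoDB URI, percent-escaping the credentials.

    Hypothetical helper for illustration; the original pipeline
    formats the URI inline in open_spider.
    """
    return 'mongodb://%s:%s@%s:%s/' % (
        quote_plus(user),      # escape reserved characters in the username
        quote_plus(password),  # escape reserved characters in the password
        host,
        port,
    )


if __name__ == '__main__':
    # Same sample credentials as the pipeline above.
    print(build_mongo_uri('mongoadmin', 'mg123456'))
```

The result could then be passed to `MongoClient(...)` in place of the inline format string. For the sample credentials the output is identical to the original URI, so nothing else in the pipeline would need to change.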