command

install

Example

1st run

settings.py

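- Stop the spider after 150 items have been scraped (`CLOSESPIDER_ITEMCOUNT = 150`).

- Enable the HTTP cache and keep cached responses for 3600 seconds, i.e. 1 hour (`HTTPCACHE_EXPIRATION_SECS = 3600`).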

CLOSESPIDER_ITEMCOUNT = 150

HTTPCACHE_ENABLED = True

HTTPCACHE_EXPIRATION_SECS = 3600

Run scrapyrt

(myenv10_scrapy) D:\work\run\python_crawler\108-scrapy-practice\steam>scrapyrt

2023-01-10 16:21:47+0800 [-] Log opened.

2023-01-10 16:21:47+0800 [-] Site starting on 9080

2023-01-10 16:21:47+0800 [-] Starting factory <twisted.web.server.Site object at 0x000002329296FEB0>

2023-01-10 16:21:47+0800 [-] Running with reactor: AsyncioSelectorReactor.

start=0, total_count=2446

2 ==================

next offset_index=50

==================

start=50, total_count=2446

2023-01-10 16:51:08+0800 [-] "127.0.0.1" - - [10/Jan/2023:08:51:07 +0000] "GET /crawl.json?start_requests=true&spider_name=more_best HTTP/1.1" 200 46280 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36"

==================

start=0, total_count=2446

2 ==================

next offset_index=50

==================

start=50, total_count=2446

2023-01-10 16:51:19+0800 [-] "127.0.0.1" - - [10/Jan/2023:08:51:18 +0000] "GET /crawl.json?start_requests=true&spider_name=more_best HTTP/1.1" 200 46280 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36"

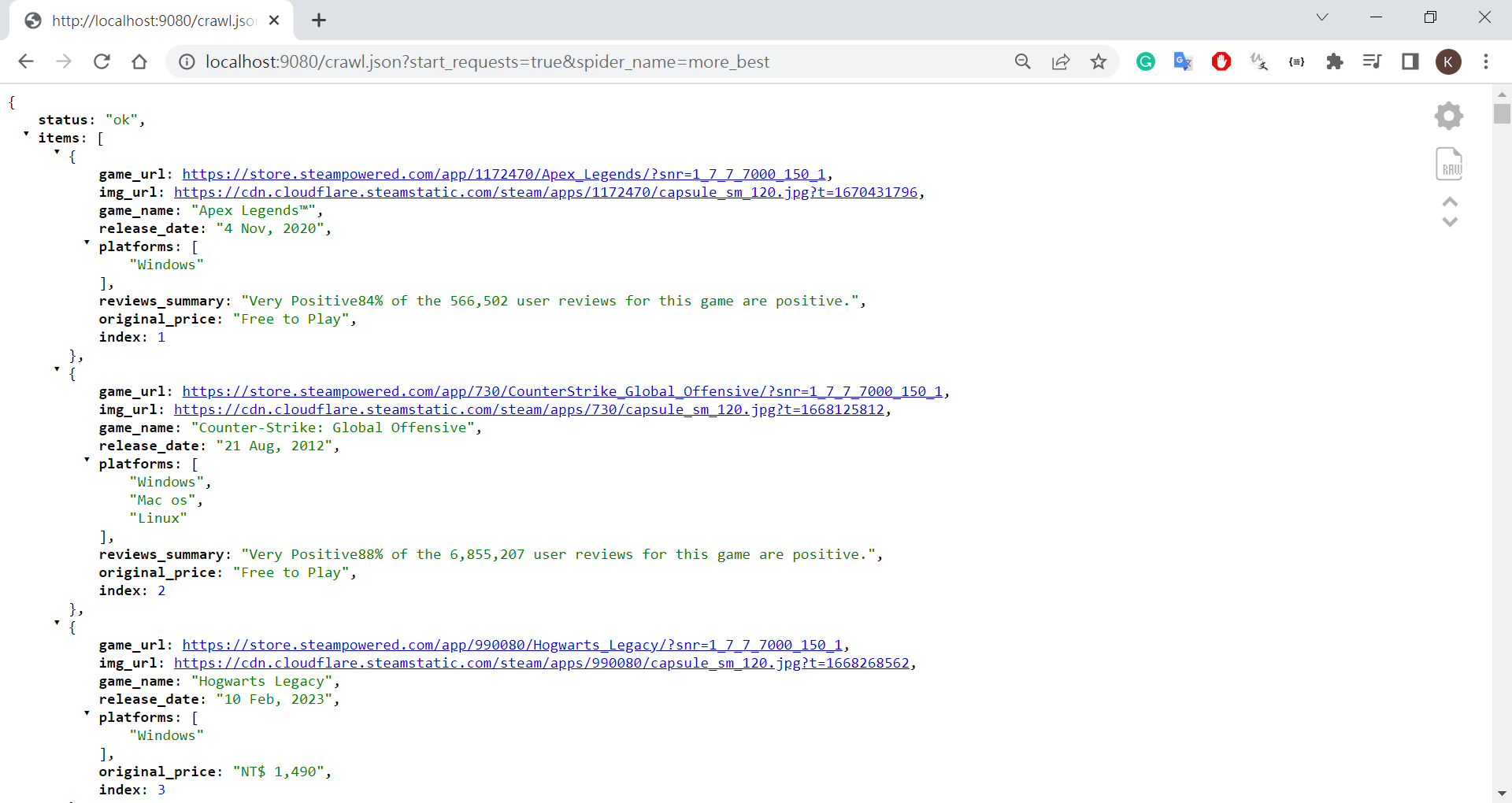
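The `start=…`, `total_count=…` and `next offset_index=50` lines in the log suggest that the spider pages through results 50 at a time. The spider's code is not shown here, so the following is only a sketch of that arithmetic under this assumption; `next_offset` and `PAGE_SIZE` are hypothetical names, not taken from the project.

```python
from typing import Optional

# Assumption: page size of 50, inferred from the log (start=0, then offset 50).
PAGE_SIZE = 50


def next_offset(start: int, total_count: int, page_size: int = PAGE_SIZE) -> Optional[int]:
    """Return the start offset of the next page, or None if no page is left."""
    candidate = start + page_size
    # A further page exists only while the next offset is still below the total.
    return candidate if candidate < total_count else None
```

With the values from the log, `next_offset(0, 2446)` gives the offset for the second request, and the function returns `None` once the offset would run past `total_count`.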

Open the scrapyrt server in Chrome

http://localhost:9080

Open this URL in Chrome to trigger the spider `more_best`

http://localhost:9080/crawl.json?start_requests=true&spider_name=more_best
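The same request can be sent from a script instead of the browser. The sketch below uses only the Python standard library and assumes scrapyrt is listening on `localhost:9080` as in the log; the helper names `build_crawl_url` and `trigger_crawl` are made up for illustration, and the `items` key is what I would expect in the JSON response rather than something shown in this post.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def build_crawl_url(spider_name, host="localhost", port=9080):
    """Build the crawl.json URL used above for the given spider."""
    query = urlencode({"start_requests": "true", "spider_name": spider_name})
    return f"http://{host}:{port}/crawl.json?{query}"


def trigger_crawl(spider_name, timeout=120):
    """Send the GET request and return the decoded JSON response.

    Requires a running scrapyrt server; the timeout value is an assumption.
    """
    with urlopen(build_crawl_url(spider_name), timeout=timeout) as response:
        return json.load(response)


# Example usage (needs scrapyrt running in the project directory):
# result = trigger_crawl("more_best")
# print(len(result.get("items", [])))
```

Building the query with `urlencode` keeps the URL valid if a spider name ever contains characters that need escaping.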

Ref